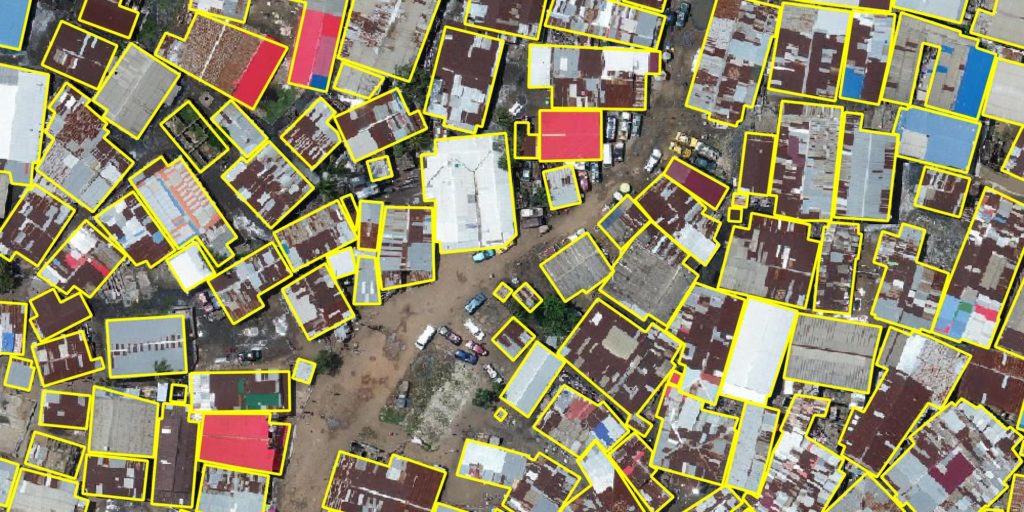

We partnered with DrivenData and the World Bank to develop the Open Cities AI Challenge. This competition asks contestants to build semantic segmentation models that identify buildings in aerial imagery from several African cities. In other words, the goal is to automatically extract building footprints from each image. The challenge ends on March 16th. Contestants will be judged on the quality of their predictions and will compete for a share of $15,000 in prize money.
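To make "semantic segmentation" concrete: a model of this kind assigns a label to every pixel of an image chip, here either building (1) or background (0), and the resulting mask traces the building footprints. The sketch below is a minimal illustration, assuming masks stored as nested Python lists, and scores a prediction against ground truth with pixel-wise intersection over union (the Jaccard index), a common overlap measure for segmentation. It is not the competition's official scoring code; the function name `iou` and the toy masks are hypothetical.

```python
from typing import List

# A binary mask: 1 = building pixel, 0 = background (illustrative representation).
Mask = List[List[int]]


def iou(pred: Mask, truth: Mask) -> float:
    """Pixel-wise intersection over union (Jaccard index) of two binary masks."""
    if len(pred) != len(truth) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(pred, truth)
    ):
        raise ValueError("masks must have the same shape")

    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == 1 and t == 1:
                intersection += 1  # pixel labeled building in both masks
            if p == 1 or t == 1:
                union += 1  # pixel labeled building in at least one mask
    # Two empty masks agree perfectly, so treat that case as a score of 1.0.
    return intersection / union if union else 1.0


# Hypothetical 3x3 chip: the prediction recovers 3 of the 4 true building pixels
# and adds 1 false positive, so IoU = 3 / 5.
truth = [[0, 1, 1],
         [0, 1, 1],
         [0, 0, 0]]
pred = [[0, 1, 1],
        [0, 1, 0],
        [0, 1, 0]]
print(iou(pred, truth))  # 0.6
```

Because IoU divides overlap by the combined area of both masks, it penalizes missed buildings and spurious detections alike, which is why overlap scores of this family are popular for footprint extraction.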

Disaster relief efforts rely on accurate and up-to-date infrastructure maps. However, many vulnerable societies lack the underlying data to produce them. The Global Facility for Disaster Reduction and Recovery (GFDRR) sponsored this challenge in the hope that machine learning can generate these data automatically. That would dramatically increase our ability to map previously unmapped regions. The potential global benefit of automatic feature extraction is undeniable, but efforts to make it work reliably are still relatively nascent. We hope this competition spurs the innovation needed to turn that potential into reality.

Why did we get involved in an AI challenge?

At Azavea we’ve been spending a lot of time thinking about machine learning, specifically how we can use it in conjunction with aerial imagery. We have put our heads together with change-making organizations like the Inter-American Development Bank to work through real-world problems and determine AI-based solutions for them. Together we ask questions such as: How can we use algorithms to help prevent the next great natural disaster? And what are the ethical implications of doing so?

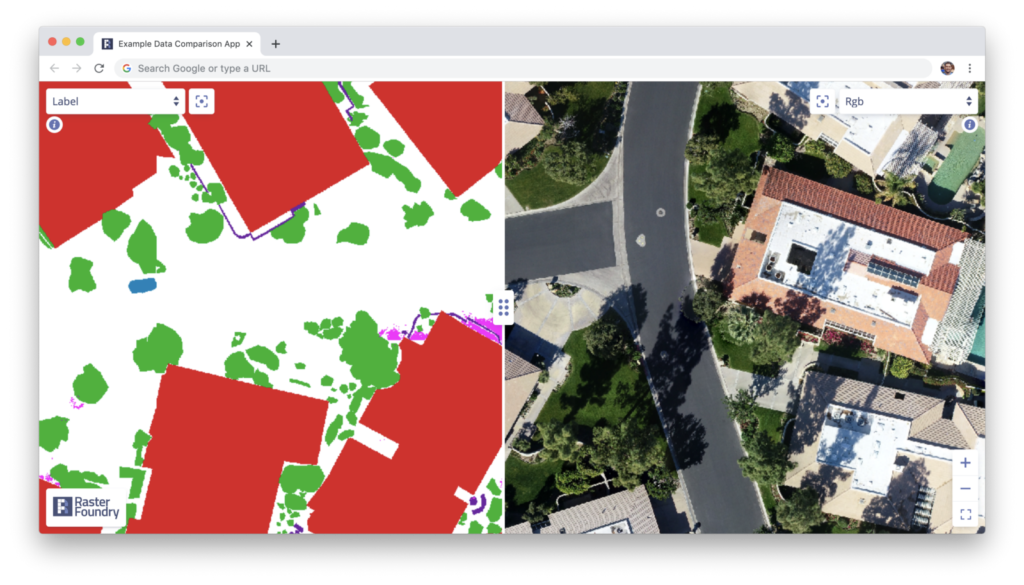

But finding the use cases is only part of the equation. We also spend a lot of time trying to innovate in our field. We build software tools that make it easier for others to build computer vision pipelines on otherwise unwieldy data sets. Our Raster Foundry platform empowers organizations to manage, explore, and label large collections of overhead imagery.

We have also developed a machine learning framework called Raster Vision. Raster Vision makes it easy to perform computer vision tasks on satellite and aerial imagery by helping users feed the square pegs of geospatial data sets into the round holes of powerful deep learning libraries like PyTorch and TensorFlow.

As a company with a deep belief in open-source technology, we know that innovation will come from others using and building on the tools we create. In the data science world, this type of innovation often happens in the context of machine learning competitions. We have participated in these competitions in the past, but we are excited to become even more deeply involved with the Open Cities AI Challenge.

Azavea’s benchmark model

As part of Azavea’s involvement in the project, we created a “benchmark” model using Raster Vision and made the code available to the public. This repository shows potential contestants how to start with the provided data and complete every step needed to produce a complete submission. Following the instructions in the benchmark repository won’t produce a winning submission, but it will generate recognizable building predictions. From there, contestants can adjust training parameters to develop a competitive set of predictions.

Original test chip

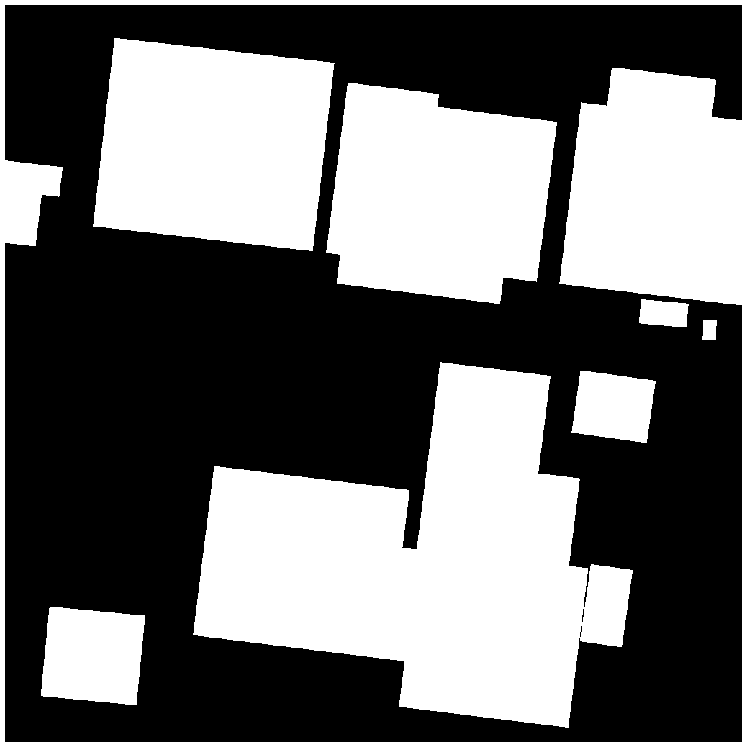

Ground-truth labels

Benchmark model predictions

More importantly, the benchmark gives anyone a template for completing the task from beginning to end. We hope contestants will use this code as a starting point to iterate on.

So what are you waiting for? The competition is open now through March 16th. Get started today and make sure to join the community on the Discussion Forum. We can’t wait to see what you do!