At Azavea, we use Amazon Elastic MapReduce (EMR) quite a bit to drive batch GeoTrellis workflows with Apache Spark. Across all of that usage, we’ve accumulated many ways to provision a cluster.

- Bash scripts driving the AWS CLI

- Python code using the Boto 3 EMR module

- JSON/YAML templates submitted to CloudFormation

Missing from that list is Terraform, a tool for building, changing, and combining infrastructure. Although we use Terraform across many of our projects to manage other AWS resources, we haven’t used it to manage EMR clusters because it was lacking spot pricing support for instance groups. When spot pricing support landed in an official release toward the end of September 2017, we decided to encapsulate all of the resources required to launch an Amazon EMR cluster into a reusable Terraform module.

Terraform Modules

Modules in Terraform are units of Terraform configuration managed as a group. For example, an Amazon EMR module needs configuration for an Amazon EMR cluster resource, but it also needs multiple security groups, IAM roles, and an instance profile.

$ terraform state list | grep "module.emr" | sort
module.emr.aws_emr_cluster.cluster
module.emr.aws_iam_instance_profile.emr_ec2_instance_profile
module.emr.aws_iam_policy_document.ec2_assume_role
module.emr.aws_iam_policy_document.emr_assume_role
module.emr.aws_iam_role.emr_ec2_instance_profile
module.emr.aws_iam_role.emr_service_role
module.emr.aws_iam_role_policy_attachment.emr_ec2_instance_profile
module.emr.aws_iam_role_policy_attachment.emr_service_role
module.emr.aws_security_group.emr_master
module.emr.aws_security_group.emr_slave

Why encapsulate all of the necessary configuration into a reusable module? Because when you’re trying to make use of EMR, you don’t care about all the complexity these companion resources bring—you care about the program you’re about to run on EMR.

Terraform Module Components

On a fundamental level, Terraform modules consist of inputs, outputs, and Terraform configuration. Inputs feed configuration, and when configuration gets evaluated, it computes outputs that can route into other workflows.

Inputs

Inputs are variables we provide to a module in order for it to do its thing. Currently, the EMR module has the following variables:

$ grep "variable" variables.tf | cut -d\" -f2 | sort
applications
bootstrap_args
bootstrap_name
bootstrap_uri
configurations
environment
instance_groups
key_name
log_uri
name
project
release_label
subnet_id
vpc_id

Variables like project and environment are consistent across Azavea maintained modules, and mainly get used for tags. Others, like release_label, instance_groups, and configurations, are essential for the EMR cluster resource.
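To make the inputs more concrete, here is a minimal sketch of how a few of these variables might be declared in variables.tf. It uses the same Terraform 0.11-era syntax as the rest of this post. The descriptions and default values are assumptions for illustration, not the module's actual definitions.

```hcl
# Hypothetical excerpt of variables.tf.
# Descriptions and defaults are assumed, not copied from the module.

variable "project" {
  description = "Name of the project, used for tagging"
  default     = "Unknown"
}

variable "environment" {
  description = "Name of the environment, used for tagging"
  default     = "Unknown"
}

variable "release_label" {
  description = "EMR release version to launch"
  default     = "emr-5.9.0"
}

variable "applications" {
  description = "Applications to install on the cluster"
  type        = "list"
  default     = ["Spark"]
}

# No default: the caller must describe the instance groups explicitly.
variable "instance_groups" {
  description = "List of maps, one per instance group"
  type        = "list"
}
```

Leaving a variable without a default, as with instance_groups here, makes Terraform require a value from whoever instantiates the module.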

Configuration

As inputs come in, they get layered into the data source and resource configurations listed above. Below are examples of each data source or resource type used in the EMR cluster module, along with some detail around its use.

Identity and Access Management (IAM)

An aws_iam_policy_document is a declarative way to assemble IAM policy objects in Terraform. Here, it is being used to create a trust relationship for an IAM role such that the EC2 service can assume the associated role and make AWS API calls on our behalf.

data "aws_iam_policy_document" "ec2_assume_role" {
  statement {
    effect = "Allow"

    principals {
      type        = "Service"
      identifiers = ["ec2.amazonaws.com"]
    }

    actions = ["sts:AssumeRole"]
  }
}

An aws_iam_role resource encapsulates trust relationships and permissions. For EMR, one role associates with the EMR service itself, while the other associates with the EC2 instances that make up the compute capacity for the EMR cluster. Linking the trust relationship policy above with a new IAM role is demonstrated below.

resource "aws_iam_role" "emr_ec2_instance_profile" {
  name               = "${var.environment}JobFlowInstanceProfile"
  assume_role_policy = "${data.aws_iam_policy_document.ec2_assume_role.json}"
}

To connect permissions with an IAM role, there is an aws_iam_role_policy_attachment resource. In this case, we’re using a canned policy (referenced via Amazon Resource Name, or ARN) supplied by AWS. This policy comes close to providing a kitchen sink’s worth of permissions (S3, DynamoDB, SQS, SNS, etc.) to the role.

resource "aws_iam_role_policy_attachment" "emr_ec2_instance_profile" {
  role       = "${aws_iam_role.emr_ec2_instance_profile.name}"
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforEC2Role"
}

Finally, there is aws_iam_instance_profile, which is a container for an IAM role that passes the role's information to an EC2 instance when the instance starts. This type of resource is only necessary when associating a role with an EC2 instance, not other AWS services.

resource "aws_iam_instance_profile" "emr_ec2_instance_profile" {
  name = "${aws_iam_role.emr_ec2_instance_profile.name}"
  role = "${aws_iam_role.emr_ec2_instance_profile.name}"
}
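The examples above cover only the EC2 side of the pair of roles. As a rough sketch, the EMR service role could follow the same three-step pattern (trust policy, role, policy attachment) without an instance profile. The resource names match the state listing earlier in this post, but the role name and the choice of the AWS-managed AmazonElasticMapReduceRole policy are assumptions rather than a copy of the module source.

```hcl
# Trust policy that lets the EMR service (rather than EC2) assume the role.
data "aws_iam_policy_document" "emr_assume_role" {
  statement {
    effect = "Allow"

    principals {
      type        = "Service"
      identifiers = ["elasticmapreduce.amazonaws.com"]
    }

    actions = ["sts:AssumeRole"]
  }
}

# The role name below is an assumption for illustration.
resource "aws_iam_role" "emr_service_role" {
  name               = "${var.environment}JobFlowServiceRole"
  assume_role_policy = "${data.aws_iam_policy_document.emr_assume_role.json}"
}

# Assumed: the AWS-managed default policy for the EMR service role.
resource "aws_iam_role_policy_attachment" "emr_service_role" {
  role       = "${aws_iam_role.emr_service_role.name}"
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceRole"
}
```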

Security Groups

Security groups house firewall rules for compute resources in a cluster. This module creates two security groups without rules. Rules are automatically populated by the EMR service (to support cross-node communication), but you can also grab a handle to the security group via its ID and add more rules through the aws_security_group_rule resource.

resource "aws_security_group" "emr_master" {
  vpc_id                 = "${var.vpc_id}"
  revoke_rules_on_delete = true

  tags {
    Name        = "sg${var.name}Master"
    Project     = "${var.project}"
    Environment = "${var.environment}"
  }
}

A special thing to note here is the usage of revoke_rules_on_delete. This setting ensures that all the remaining rules contained inside a security group are removed before deletion. This is important because EMR creates cyclic security group rules (rules with other security groups referenced), which prevent security groups from deleting gracefully.
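The second security group, emr_slave, appears in the state listing but is not shown here. A plausible sketch, assuming it simply mirrors emr_master with a different Name tag (the tag value is a guess):

```hcl
# Assumed counterpart to emr_master; the Name tag value is hypothetical.
resource "aws_security_group" "emr_slave" {
  vpc_id                 = "${var.vpc_id}"
  revoke_rules_on_delete = true

  tags {
    Name        = "sg${var.name}Slave"
    Project     = "${var.project}"
    Environment = "${var.environment}"
  }
}
```

Setting revoke_rules_on_delete on both groups matters because the rules EMR adds reference each group from the other, so either one could otherwise block deletion.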

EMR Cluster

Last, but not least, is the aws_emr_cluster resource. As you can see, almost all the module variables are being used in this resource.

resource "aws_emr_cluster" "cluster" {
  name           = "${var.name}"
  release_label  = "${var.release_label}"
  applications   = "${var.applications}"
  configurations = "${var.configurations}"

  ec2_attributes {
    key_name                          = "${var.key_name}"
    subnet_id                         = "${var.subnet_id}"
    emr_managed_master_security_group = "${aws_security_group.emr_master.id}"
    emr_managed_slave_security_group  = "${aws_security_group.emr_slave.id}"
    instance_profile                  = "${aws_iam_instance_profile.emr_ec2_instance_profile.arn}"
  }

  instance_group = "${var.instance_groups}"

  bootstrap_action {
    path = "${var.bootstrap_uri}"
    name = "${var.bootstrap_name}"
    args = "${var.bootstrap_args}"
  }

  log_uri      = "${var.log_uri}"
  service_role = "${aws_iam_role.emr_service_role.arn}"

  tags {
    Name        = "${var.name}"
    Project     = "${var.project}"
    Environment = "${var.environment}"
  }
}

Most of the configuration is straightforward, except for instance_group. instance_group is a complex variable made up of a list of objects. Each object corresponds to a specific instance group type, of which there are three (MASTER, CORE, and TASK).

[
  {
    name           = "MasterInstanceGroup"
    instance_role  = "MASTER"
    instance_type  = "m3.xlarge"
    instance_count = "1"
  },
  {
    name           = "CoreInstanceGroup"
    instance_role  = "CORE"
    instance_type  = "m3.xlarge"
    instance_count = "2"
    bid_price      = "0.30"
  },
]

The MASTER instance group contains the head node in your cluster, or a group of head nodes with one elected leader via a consensus process. CORE usually contains nodes responsible for Hadoop Distributed File System (HDFS) storage, but more generally applies to instances you expect to stick around for the entire lifetime of your cluster. TASK instance groups are meant to be more ephemeral, so they don’t contain HDFS data, and by default don’t accommodate YARN (resource manager for Hadoop clusters) application master tasks.
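The example list above defines only MASTER and CORE groups. As a hedged sketch, a TASK group could be added as a third map in the same list. The group name, instance count, and bid price below are illustrative assumptions, and the fragment is meant to sit inside a module block rather than stand alone.

```hcl
# Fragment of a module block: instance_groups with an added TASK group.
# The TASK entry's name, count, and bid price are assumptions.
instance_groups = [
  {
    name           = "MasterInstanceGroup"
    instance_role  = "MASTER"
    instance_type  = "m3.xlarge"
    instance_count = "1"
  },
  {
    name           = "CoreInstanceGroup"
    instance_role  = "CORE"
    instance_type  = "m3.xlarge"
    instance_count = "2"
  },
  {
    # Ephemeral capacity: no HDFS data lives here, so spot pricing is a
    # natural fit for this group.
    name           = "TaskInstanceGroup"
    instance_role  = "TASK"
    instance_type  = "m3.xlarge"
    instance_count = "2"
    bid_price      = "0.30"
  },
]
```

This variant keeps CORE on-demand and reserves bid_price for TASK, on the reasoning that losing a spot TASK node does not take HDFS blocks with it.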

Outputs

As configuration gets evaluated, resources compute values, and those values can be emitted from the module as outputs. These are typically IDs or DNS endpoints for resources within the module. In this case, we emit the cluster ID so that you can use it as an argument to out-of-band API calls, security group IDs so that you can add extra security group rules, and the head node FQDN so that you can use SSH to run commands or check status.

$ grep "output" outputs.tf | cut -d\" -f2 | sort
id
master_public_dns
master_security_group_id
name
slave_security_group_id
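For illustration, an outputs.tf that produces these five names might look roughly like the following. The output names come from the listing above, while the expressions assigned to them are assumptions based on the resources shown earlier in this post.

```hcl
# Hypothetical outputs.tf; the value expressions are assumed.

output "id" {
  value = "${aws_emr_cluster.cluster.id}"
}

output "name" {
  value = "${aws_emr_cluster.cluster.name}"
}

# FQDN of the head node, useful for SSH.
output "master_public_dns" {
  value = "${aws_emr_cluster.cluster.master_public_dns}"
}

output "master_security_group_id" {
  value = "${aws_security_group.emr_master.id}"
}

output "slave_security_group_id" {
  value = "${aws_security_group.emr_slave.id}"
}
```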

Module Configuration

Creating an instance of a module is simple once the module source is up on GitHub or the Terraform Module Registry. But, before instantiating the module, we want to make sure that our AWS provider is properly configured. If you make use of named AWS credential profiles, then all you need to set in the provider block is a version and a region. Exporting AWS_PROFILE with the desired AWS credential profile name before invoking Terraform ensures that the underlying AWS SDK uses the right set of credentials.

provider "aws" {
  version = "~> 1.2.0"
  region  = "us-east-1"
}

From there, we create a module block with source set to the GitHub repository URL of a specific version (Git tag) of the terraform-aws-emr-cluster module.

module "emr" {
  source = "github.com/azavea/terraform-aws-emr-cluster?ref=0.1.1"

  name          = "BlogCluster"
  vpc_id        = "vpc-2ls49630"
  release_label = "emr-5.9.0"

  applications = [
    "Hadoop",
    "Ganglia",
    "Spark",
  ]

  configurations = "${data.template_file.emr_configurations.rendered}"
  key_name       = "blog-cluster"
  subnet_id      = "subnet-8f29485g"

  instance_groups = [
    {
      name           = "MasterInstanceGroup"
      instance_role  = "MASTER"
      instance_type  = "m3.xlarge"
      instance_count = "1"
    },
    {
      name           = "CoreInstanceGroup"
      instance_role  = "CORE"
      instance_type  = "m3.xlarge"
      instance_count = "2"
      bid_price      = "0.30"
    },
  ]

  bootstrap_name = "runif"
  bootstrap_uri  = "s3://elasticmapreduce/bootstrap-actions/run-if"
  bootstrap_args = ["instance.isMaster=true", "echo running on master node"]

  log_uri     = "s3n://blog-global-logs-us-east-1/EMR/"
  project     = "Blog"
  environment = "Test"
}

Besides the EMR module, we also make use of a template_file data source to pull in a file containing the JSON required for EMR cluster configuration. Once retrieved, this data source renders the file contents (we have no variables defined, so no actual templating is going to occur) into the value of the configurations module variable.

provider "template" {
  version = "~> 1.0.0"
}

data "template_file" "emr_configurations" {
  template = "${file("configurations/default.json")}"
}
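If you did want templating to occur, a variation along these lines should work. This is only a sketch: the data source name, the file name spark.json.tpl, and the executor_memory variable are all hypothetical, and the JSON file would need to contain a matching ${executor_memory} placeholder.

```hcl
# Hypothetical variant that actually substitutes a value into the JSON.
# The file path and variable name are assumptions for illustration.
data "template_file" "emr_configurations_templated" {
  template = "${file("configurations/spark.json.tpl")}"

  vars {
    # Replaces every ${executor_memory} placeholder in the template file.
    executor_memory = "4g"
  }
}
```

The rendered result would then be passed to the module in the same way, through the data source's rendered attribute.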

Finally, we want to add a few rules to the cluster security groups. For the head node security group, we want to open port 22 for SSH, and for both security groups we want to allow all egress traffic. As you can see, we’re able to do this via the available module.emr.*_security_group_id outputs.

resource "aws_security_group_rule" "emr_master_ssh_ingress" {
  type              = "ingress"
  from_port         = "22"
  to_port           = "22"
  protocol          = "tcp"
  cidr_blocks       = ["1.2.3.4/32"]
  security_group_id = "${module.emr.master_security_group_id}"
}

resource "aws_security_group_rule" "emr_master_all_egress" {
  type              = "egress"
  from_port         = "0"
  to_port           = "0"
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = "${module.emr.master_security_group_id}"
}

resource "aws_security_group_rule" "emr_slave_all_egress" {
  type              = "egress"
  from_port         = "0"
  to_port           = "0"
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
  security_group_id = "${module.emr.slave_security_group_id}"
}
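One detail the configuration above leaves implicit: Terraform only prints outputs declared in the root configuration, so for the cluster ID and head node FQDN to show up after an apply, the module outputs need to be re-exported. A minimal sketch, assuming these blocks live alongside the module block in the same file:

```hcl
# Re-export selected module outputs at the root level so that
# `terraform apply` and `terraform output` display them.

output "id" {
  value = "${module.emr.id}"
}

output "master_public_dns" {
  value = "${module.emr.master_public_dns}"
}
```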

Taking the Module for a Spin

Once we have all the configuration above saved, we can use Terraform to create and destroy the cluster.

$ tree
.
├── configurations
│   └── default.json
└── test.tf

First, we assemble a plan with the available configuration. This gives Terraform an opportunity to inspect the state of the world and determine exactly what it needs to do to make the world match our desired configuration.

$ terraform plan -out=test.tfplan

From here, we inspect the command output (the infrastructure equivalent of a diff) of all the data sources and resources Terraform plans to create, modify, or destroy. If that looks good, the next step is to apply the plan.

$ terraform apply test.tfplan
...
Apply complete! Resources: 11 added, 0 changed, 0 destroyed.

Outputs:

id = j-3UHI5QFY5ALNH
master_public_dns = ec2-54-210-208-229.compute-1.amazonaws.com

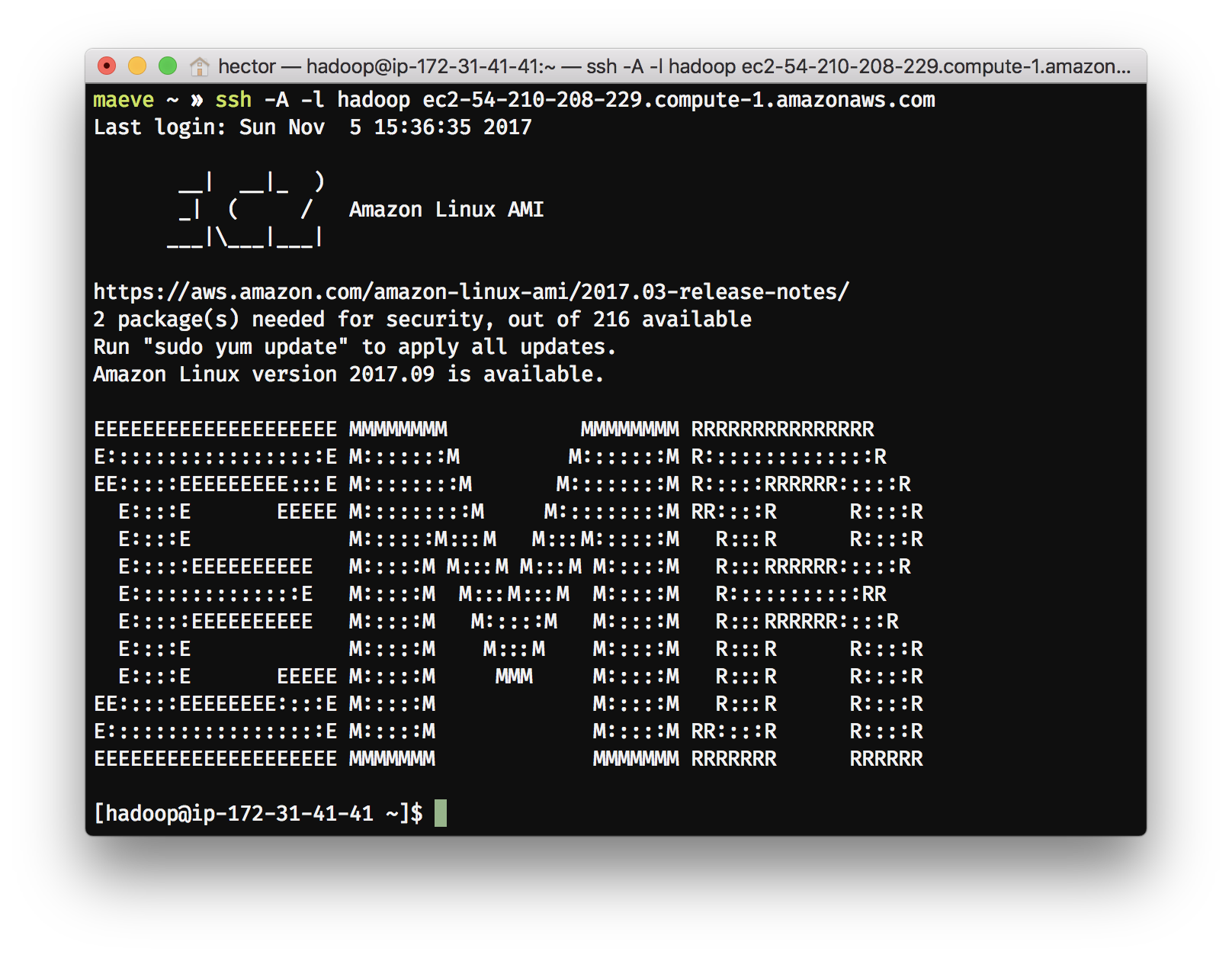

Now that we’ve got the head node FQDN, let’s SSH in and use the yarn CLI to ensure our cluster node count is accurate.

$ ssh -A -l hadoop ec2-54-210-208-229.compute-1.amazonaws.com
[hadoop@ip-172-31-41-41 ~]$ yarn node -list | grep "Total Nodes"
Total Nodes:2

Once we’re done with that, we’ll want to clean up all the AWS resources so that we don’t run up a huge bill.

$ terraform destroy
...
Plan: 0 to add, 0 to change, 11 to destroy.

Do you really want to destroy?
  Terraform will destroy all your managed infrastructure, as shown above.
  There is no undo. Only 'yes' will be accepted to confirm.

  Enter a value: yes
...
Destroy complete! Resources: 11 destroyed.

Conclusion

We think the terraform-aws-emr-cluster module is off to a decent start, and we hope that it can successfully replace all our past approaches to provisioning EMR clusters. We also hope that this post helps folks make sense of Terraform modules, Amazon EMR, and our desire to combine the two to make our lives easier.

If you’re interested in learning more about Terraform, some really thorough guides exist within the official Terraform documentation. Also, Yevgeniy Brikman has done a great job assembling a comprehensive guide to Terraform on his company blog. If you’re interested in learning more about Amazon EMR, the official AWS documentation covers a ton of ground.

Lastly, if you’re a user of Amazon EMR and Terraform, and get an opportunity to make use of our module, please let us know what you think.